Debiasing Word Embeddings

Image courtesy Salesforce

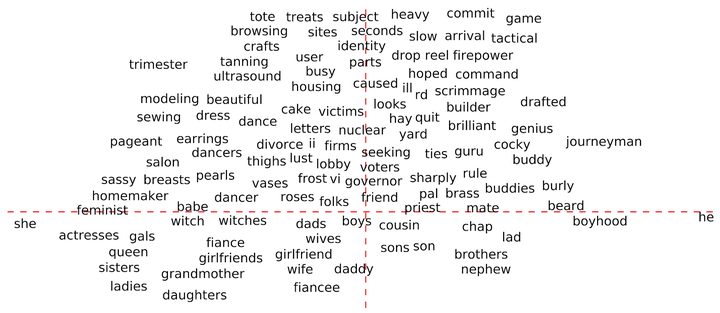

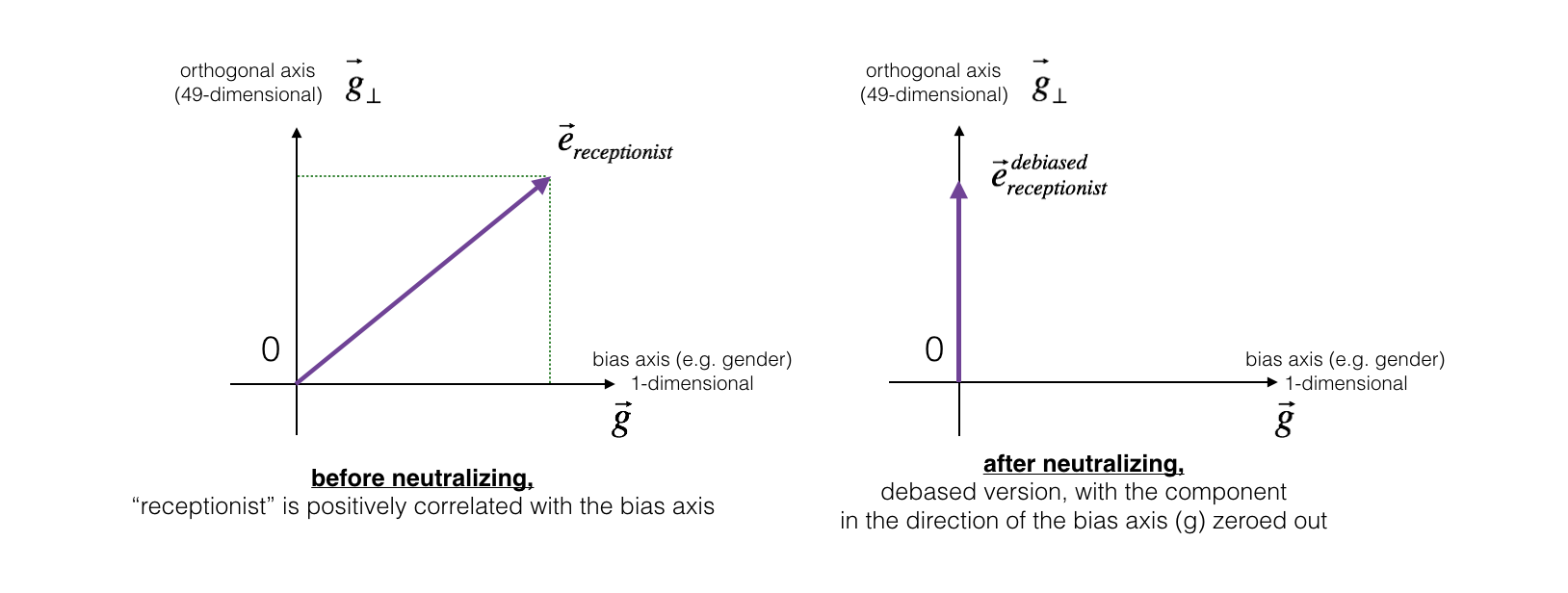

This mini-project explores pre-trained GloVe word embeddings for potential gender bias. We extract a gender direction in the embedding space and then show how some words that should be gender-neutral are biased with respect to this direction; for example, nurse is closer to female than to male.
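The measurement described above can be sketched as follows. This is a minimal sketch, not the project's code: the 3-dimensional vectors are hypothetical stand-ins for real GloVe embeddings (loading GloVe is not shown), and the helper names `cosine_similarity` and `gender_direction` are illustrative. With a single pair, the gender direction reduces to `woman - man`. Averaging the difference over several pairs is an assumption made here for robustness.

```python
import numpy as np

def cosine_similarity(u: np.ndarray, v: np.ndarray) -> float:
    """Cosine of the angle between u and v."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

def gender_direction(emb: dict, pairs: list) -> np.ndarray:
    """Average of the (female - male) difference vectors over the given word pairs."""
    return np.mean([emb[f] - emb[m] for f, m in pairs], axis=0)

# Hypothetical 3-d toy vectors standing in for real GloVe embeddings.
emb = {
    "woman": np.array([1.0, 0.2, 0.0]),
    "man": np.array([-1.0, 0.2, 0.0]),
    "nurse": np.array([0.4, 0.1, 0.9]),
    "engineer": np.array([-0.3, 0.1, 0.9]),
}
g = gender_direction(emb, [("woman", "man")])

for word in ("nurse", "engineer"):
    # Positive similarity leans towards "woman", negative towards "man".
    print(word, round(cosine_similarity(emb[word], g), 3))
```

With these toy values, nurse has a positive similarity to `g` and engineer a negative one, mirroring the kind of bias the project looks for.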

We then apply the debiasing methods described here, and show how even a simple debiasing method can reduce this bias.

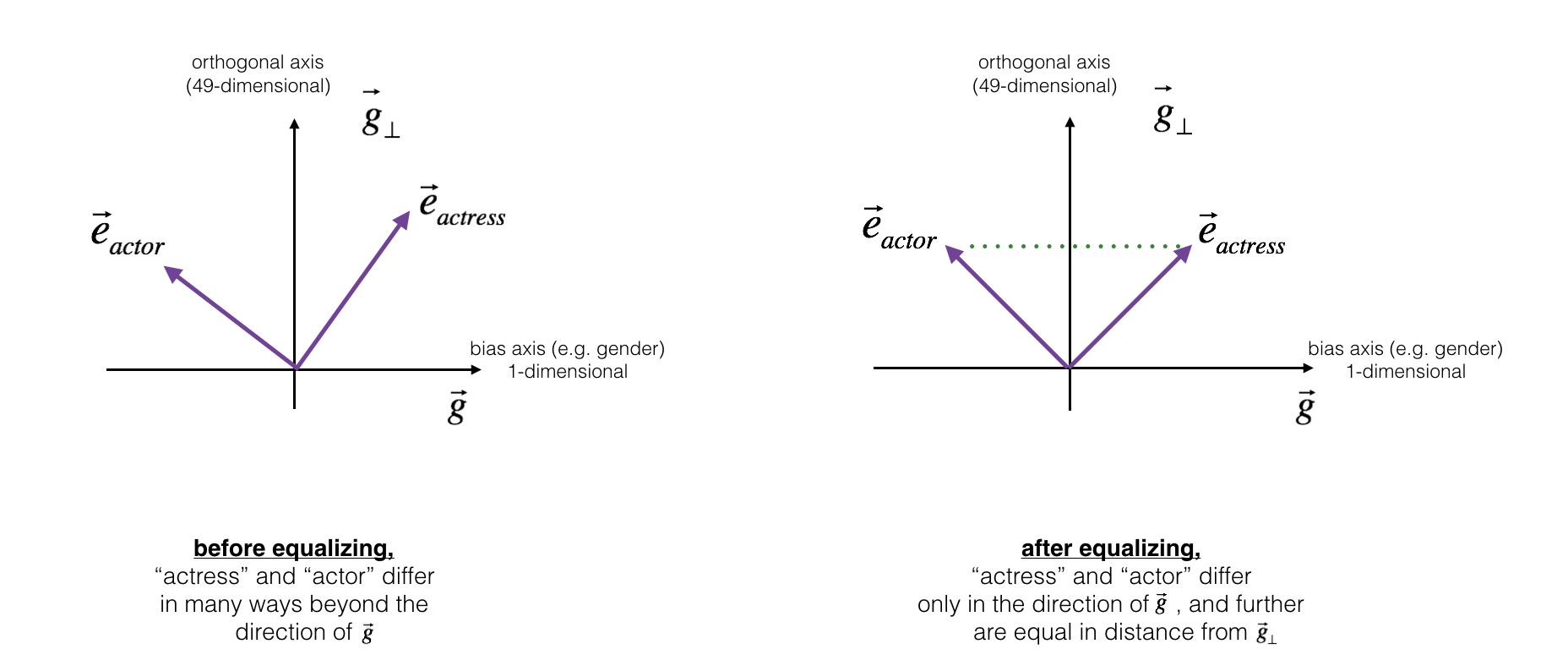
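A simple debiasing step of this kind is neutralization: subtract from a gender-neutral word's vector its projection onto the gender direction, leaving a vector orthogonal to it. The sketch below assumes a single (one-dimensional) gender direction `g` and uses hypothetical toy vectors; the function name `neutralize` is illustrative and the project's own implementation may differ.

```python
import numpy as np

def neutralize(e: np.ndarray, g: np.ndarray) -> np.ndarray:
    """Remove the component of e that lies along the bias direction g."""
    e_bias = (np.dot(e, g) / np.dot(g, g)) * g  # projection of e onto g
    return e - e_bias

g = np.array([2.0, 0.0, 0.0])        # hypothetical gender direction
nurse = np.array([0.4, 0.1, 0.9])    # hypothetical embedding for "nurse"
nurse_debiased = neutralize(nurse, g)

# After neutralization the dot product with g should be (close to) zero.
print(np.dot(nurse_debiased, g))
```

Neutralization is meant for words that should carry no gender, such as nurse; gender-specific words such as actress are handled by equalization instead.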

We also explore equalization, which aims to ensure that gender-specific word pairs such as actor and actress differ only along the gender direction. This prevents indirect bias: even after we neutralize any bias present in babysit, actress could otherwise still be closer to babysit than actor is.
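Equalization can be sketched as below, following the equalize formula of Bolukbasi et al. (2016): both words keep the pair's shared gender-neutral component `nu` and are placed symmetrically along the gender direction, rescaled to unit length. This is a minimal sketch assuming unit-norm embeddings and a single gender direction `g`; the helper names `project` and `equalize` and all vectors are hypothetical, and the project's own implementation may differ in details.

```python
import numpy as np

def project(e: np.ndarray, g: np.ndarray) -> np.ndarray:
    """Component of e along the bias direction g."""
    return (np.dot(e, g) / np.dot(g, g)) * g

def equalize(e1: np.ndarray, e2: np.ndarray, g: np.ndarray):
    """Equalize a gender-specific pair; e1 and e2 are assumed to be unit-norm."""
    mu = (e1 + e2) / 2
    mu_b = project(mu, g)
    nu = mu - mu_b  # shared, gender-neutral part of the pair
    scale = np.sqrt(max(0.0, 1.0 - np.dot(nu, nu)))
    equalized = []
    for e in (e1, e2):
        d = project(e, g) - mu_b  # offset along g from the pair's midpoint
        norm = np.linalg.norm(d)
        if norm == 0:
            raise ValueError("the pair does not differ along g")
        equalized.append(nu + scale * d / norm)
    return equalized[0], equalized[1]

# Hypothetical unit-norm toy vectors; the gender direction is the first axis.
g = np.array([1.0, 0.0, 0.0])
actress = np.array([0.6, 0.0, 0.8])
actor = np.array([-0.6, 0.8, 0.0])
babysit = np.array([0.0, 0.1, 0.9])  # already neutral: orthogonal to g

print(np.dot(actress, babysit), np.dot(actor, babysit))        # unequal before
actress_eq, actor_eq = equalize(actress, actor, g)
print(np.dot(actress_eq, babysit), np.dot(actor_eq, babysit))  # equal after
```

Because the equalized pair differs only along `g`, any word orthogonal to `g` (such as the neutralized babysit) ends up equally similar to both.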

This project was completed as part of the Coursera course Sequence Models.