Generating Counterfactuals for Causal Fairness

Notions of causal fairness for algorithmic decision-making systems crucially rely on estimating whether an individual (or a group of individuals) and their counterfactual individual (or groups of individuals) would receive the same decision(s). Central to this estimation is the ability to compute the features of the counterfactual individual, given the features of any individual. Recent works have proposed applying deep generative models like GANs and VAEs to real-world datasets to compute counterfactual datasets at the level of both individuals and groups. In this paper, we explore the challenges of computing accurate counterfactuals, particularly over heterogeneous tabular data, which is often used in algorithmic decision-making systems. We also investigate the implicit assumptions made when applying deep generative models to compute counterfactual datasets.

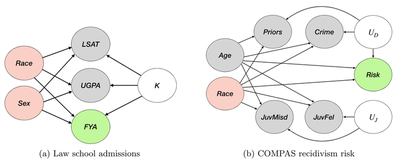

Simplifying causal assumptions

A review of the fairness and causality literature shows that the causal graphs commonly assumed there can be simplified. We can therefore almost always work with a simpler causal graph. The main considerations are:

- Sensitive features are root nodes.

- Sensitive features do not influence exogenous variables.

- Some observed features can be affected by sensitive features.
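The three considerations above can be illustrated with a toy structural causal model. This is a minimal sketch under assumed names and coefficients (`a`, `z`, `x1`, `x2`, and the weights `2.0` and `0.5` are all hypothetical), not the graph used in the project.

```python
import random

def sample_individual(rng):
    """Draw one individual from a toy structural causal model (hypothetical)."""
    # The sensitive feature A is a root node: it has no parents.
    a = rng.choice([0, 1])
    # The exogenous variable Z is drawn independently of A.
    z = rng.gauss(0.0, 1.0)
    # x1 is affected by the sensitive feature; x2 depends on Z only.
    x1 = 2.0 * a + z
    x2 = 0.5 * z
    return {"a": a, "z": z, "x1": x1, "x2": x2}

rng = random.Random(0)  # fixed seed so the sample is reproducible
data = [sample_individual(rng) for _ in range(1000)]
```

In real data only `a`, `x1` and `x2` would be observed; `z` is kept here only to make the structure explicit.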

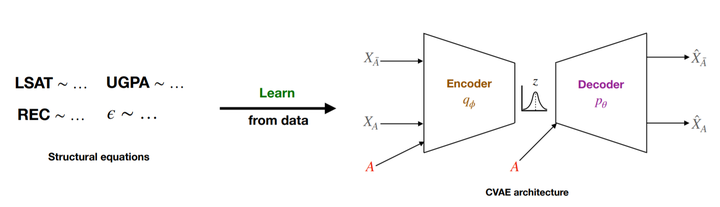

Using a Conditional VAE to generate counterfactuals

We first model the data likelihood using a Conditional VAE, conditioned on the sensitive features, and train it by minimizing its loss. We then generate counterfactuals in three steps:

- Infer latent Z from observed features using encoder

- Perform intervention, changing sensitive conditional variable

- Perform deduction using the Z and changed conditional with decoder
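The three steps above can be sketched as follows. The `encode` and `decode` functions are hypothetical toy stand-ins for the trained CVAE encoder and decoder (here exact inverses of each other under an assumed linear effect `W_A`); a real model would use learned neural networks and a stochastic latent.

```python
# Toy stand-ins for a trained CVAE (hypothetical, not the authors' model).
W_A = 2.0  # assumed effect of the sensitive feature a on the feature x

def encode(x, a):
    """Stand-in for the encoder mean: infer latent z from x given a."""
    return x - W_A * a

def decode(z, a):
    """Stand-in for the decoder mean: reconstruct x from z given a."""
    return z + W_A * a

def counterfactual(x, a, a_cf):
    # Step 1: infer the latent Z from the observed features.
    z = encode(x, a)
    # Step 2: intervene by replacing the sensitive value a with a_cf.
    # Step 3: decode using the same Z and the changed conditional.
    return decode(z, a_cf)

x_cf = counterfactual(x=3.1, a=1, a_cf=0)
```

Keeping `z` fixed while changing only the conditional is what makes the output a counterfactual of the same individual rather than a fresh sample from the other group.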

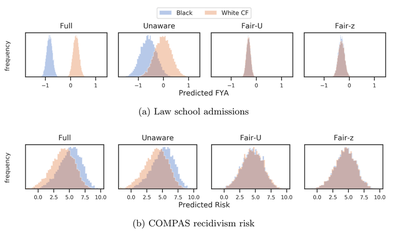
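For reference, a CVAE is commonly trained by minimizing the negative conditional evidence lower bound. The form below is the standard textbook objective, written in assumed notation ($x$ for observed features, $a$ for sensitive features, $z$ for the latent, $q_\phi$ for the encoder, $p_\theta$ for the decoder); it is not necessarily the exact loss used in this project.

$$
\mathcal{L}(\theta, \phi) = -\,\mathbb{E}_{q_\phi(z \mid x, a)}\big[\log p_\theta(x \mid z, a)\big] + \mathrm{KL}\big(q_\phi(z \mid x, a)\,\|\,p(z)\big)
$$

Taking the prior $p(z)$ to be independent of $a$ matches the assumption that sensitive features do not influence the exogenous variables.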

We can use CVAE to audit for counterfactual fairness

We show that we can use the CVAE model to audit prediction models for counterfactual fairness.

Note how the CVAE-generated counterfactuals provide auditing results very close to those of the oracle model.
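One simple way to express such an audit is to count how often a predictor's decision changes between an individual and their counterfactual. The sketch below assumes this flip rate as the audit statistic; the predictor, the feature values, and the counterfactual values are all made up for illustration and are not results from the project.

```python
def audit_counterfactual_fairness(predict, individuals, counterfactuals):
    """Fraction of individuals whose decision changes under the counterfactual."""
    flips = sum(
        predict(x) != predict(x_cf)
        for x, x_cf in zip(individuals, counterfactuals)
    )
    return flips / len(individuals)

# Hypothetical predictor under audit: a threshold on a single feature.
def predict(x):
    return int(x > 2.0)

individuals = [3.1, 0.4, 2.5, 1.9]      # factual feature values (made up)
counterfactuals = [1.1, 0.9, 2.2, 3.9]  # e.g. produced by a CVAE (made up)

flip_rate = audit_counterfactual_fairness(predict, individuals, counterfactuals)
```

A flip rate of zero would mean the predictor gives every individual the same decision as their counterfactual; the same function can be run with oracle counterfactuals to compare against the CVAE-based audit.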

We can use CVAE to train counterfactually fair predictors

We next show how the latent Z of the CVAE can be used to train prediction models that are counterfactually fair.

Note that FlipTest has no immediate way to estimate the latent Z from input data, so it cannot be used directly here.
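The idea of training on the latent Z can be sketched as below. Everything here is an assumption made for illustration: `encode` is a toy stand-in for the trained CVAE encoder, the training triples are made up, and ordinary least squares is swapped in for whatever prediction model the project actually uses.

```python
W_A = 2.0  # assumed effect of the sensitive feature on x (toy value)

def encode(x, a):
    """Toy stand-in for a trained CVAE encoder: latent z given x and a."""
    return x - W_A * a

def fit_line(zs, ys):
    """Ordinary least squares for y = slope * z + intercept."""
    n = len(zs)
    mean_z, mean_y = sum(zs) / n, sum(ys) / n
    cov = sum((z - mean_z) * (y - mean_y) for z, y in zip(zs, ys))
    var = sum((z - mean_z) ** 2 for z in zs)
    slope = cov / var
    return slope, mean_y - slope * mean_z

# Made-up training data as (x, a, y) triples.
train = [(3.0, 1, 1.0), (1.0, 0, 1.0), (2.0, 1, 0.0), (-1.0, 0, -1.0)]
zs = [encode(x, a) for x, a, _ in train]
ys = [y for _, _, y in train]
slope, intercept = fit_line(zs, ys)

def predict_fair(x, a):
    # The predictor sees only the latent z, never x or a directly.
    return slope * encode(x, a) + intercept
```

Because an individual and their counterfactual share the same latent Z, a predictor that depends on Z alone returns the same output for both, for example `predict_fair(3.1, 1)` and `predict_fair(1.1, 0)` in this toy setup.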

CVAE-based Fair-Z is able to give very similar prediction results compared to the actual

This project was done in collaboration with Preethi Lahoti, Junaid Ali, Till Speicher, Isabel Valera and Krishna Gummadi.